Building a brain that can read, part 1: Sound and sight

Executive Summary

- Reading is a relatively recent cultural invention: The human brain is not designed for reading

- In order to read, we must borrow from and build on other neural systems, including:

  - Oral language processing systems, for processing spoken sounds

  - Systems that connect printed letters with spoken sounds

  - Visual processing areas, for perceiving printed words

  - Visual processing systems, for moving our eyes across the text on a page

- Learning to read does not happen naturally:

  - Children must be taught to read

  - Brains that can read must be built

The human brain is not designed for reading

Reading is a relatively recent cultural invention. Biologically, that means that the human brain is not designed for reading. That is, the brain is not endowed with an innate part that “does reading.” Indeed, from an evolutionary perspective, there has not been sufficient time to develop a part of the brain that “does reading.” And yet you are reading these words. How is that possible?

You have built a brain that can read by borrowing from, building on, and “recycling” other neural systems<sup>e.g., 1,2</sup>. Over time and with reading practice, you and your brain have connected these systems so that they work together in the service of reading. For those who have not had the opportunity to do this, the social and economic costs of illiteracy, particularly for women and girls, are staggering<sup>e.g., 3</sup>. This brief, in two parts (see also Building a brain that can read, part 2: vocabulary and meaning), considers some of the systems involved in building a brain that can read words.[1]

[1] Both parts of this brief use English as an example of an alphabetic language (a language in which printed alphabet symbols are mapped onto sounds of spoken language). Reading in all languages involves mapping printed text to speech, but at different levels (grain sizes). Both parts of this brief also refer to beginning readers as young children, but the same principles apply to older and adult beginning readers.

Spoken language processing: phonemic awareness

Phonological awareness is a sensitivity to the sound structure of spoken language<sup>e.g., 4,5</sup>. It involves the abilities to detect, identify, and manipulate the sounds of spoken language. The smallest units of spoken language that distinguish one word from another are called phonemes. For example, the sound that the letter p makes is a phoneme in English. From here forward, I will use slashes to indicate that I am referring to sounds rather than letters; so, the sound that the letter p makes is /p/.

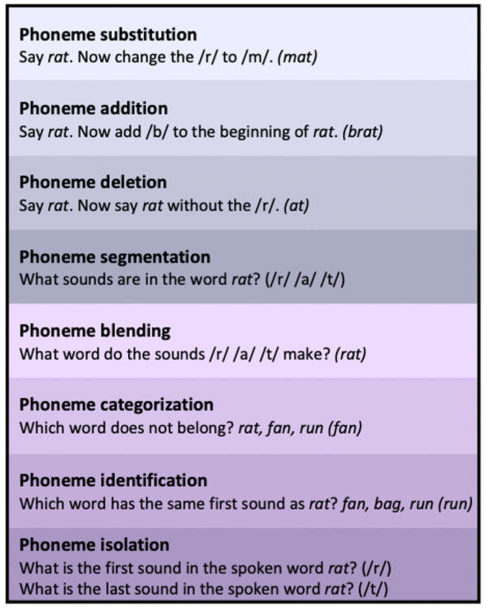

Figure 1. Some phonemic awareness tasks, with examples, from less difficult (bottom) to more difficult (top). Note that the most difficult task, phoneme substitution, relies on many of the other skills. Also recall that phonemic awareness involves sound processing, so no print is involved in these tasks – only spoken words and sounds.

Phonological awareness at the level of phonemes is called phonemic awareness. For example, knowing which of the spoken words mop, car, and mat start with the same sound reflects phonemic awareness. So does being able to blend the separated sounds /m/ /a/ /t/ into the spoken word mat. And so does being able to substitute /b/ for /m/ in the spoken word mat to create the new spoken word bat. Phonemic awareness is one of the best predictors of learning to read in alphabetic languages<sup>e.g., 6,7</sup>. Indeed, phonemic awareness is predictive of reading ability throughout the school years, from kindergarten through grade 12<sup>8</sup>.[2] Explicitly teaching students to recognize and manipulate phonemes (for example, through isolation and matching, blending, and substitution games, as in the examples above) is considered best practice based on strong research evidence<sup>e.g., 10,11, p. 2</sup>. Figure 1 summarizes some phonemic awareness tasks.

[2] In particular, the linguistic nature and phonological complexity of the stimuli, along with the requirement to produce a verbal response, are components of phonemic awareness tasks that have been related to later reading ability (in terms of decoding)<sup>9</sup>.

Unfortunately, parsing spoken words into phonemes is not easy. For example, consider the spoken word box. How many and which phonemes are in this word? There are four phonemes in the spoken word box: /b/ /o/ /k/ /s/. If you were mistaken in your phonemic analysis, you are not alone. Try this one: How many and which phonemes are in the spoken word shoe? There are only two phonemes in shoe: /sh/ and /oo/. Many pre-service and in-service teachers are unable to perform phoneme counting and manipulation tasks, such as these phoneme segmentation examples, accurately<sup>e.g., 12,13,14</sup>. It is difficult for teachers to instruct young children about the phonemes in spoken words without an adequate understanding of phonemes themselves. Luckily, there are resources to help teachers improve their phonemic awareness and instruction<sup>e.g., 11,15,16</sup>.
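One way to see why letter counts mislead us is to treat each spoken word as a sequence of phonemes and run the Figure 1 tasks on that sequence. The Python sketch below is illustrative only: the pronunciation table is a hypothetical, hand-coded assumption (labels such as "sh", "oo", and "ah" are informal stand-ins, not a phonetic alphabet), and in the classroom these tasks involve only spoken words, never print.

```python
from typing import Dict, List, Optional

# Hypothetical toy pronunciation table: spoken word -> its phonemes.
# Labels such as "sh", "oo", and "ah" are informal stand-ins, not IPA.
PHONEMES: Dict[str, List[str]] = {
    "box": ["b", "o", "k", "s"],
    "shoe": ["sh", "oo"],
    "mop": ["m", "o", "p"],
    "car": ["k", "ah", "r"],  # assumes a rhotic (e.g., American) pronunciation
    "mat": ["m", "a", "t"],
    "bat": ["b", "a", "t"],
}

def count_letters(word: str) -> int:
    """Letters in the printed word."""
    return len(word)

def count_phonemes(word: str) -> int:
    """Phoneme segmentation: how many sounds are in the spoken word."""
    return len(PHONEMES[word])

def first_sound(word: str) -> str:
    """Phoneme isolation: the first sound of the spoken word."""
    return PHONEMES[word][0]

def blend_phonemes(sounds: List[str]) -> Optional[str]:
    """Phoneme blending: the known word made of exactly these sounds, if any."""
    for word, phonemes in PHONEMES.items():
        if phonemes == sounds:
            return word
    return None

def substitute_first(word: str, new_sound: str) -> List[str]:
    """Phoneme substitution: swap the first sound for a new one."""
    return [new_sound] + PHONEMES[word][1:]

if __name__ == "__main__":
    for w in ("box", "shoe"):
        print(w, count_letters(w), "letters,", count_phonemes(w), "phonemes")
    print([w for w in ("mop", "car", "mat") if first_sound(w) == "m"])  # matching
    print(blend_phonemes(["m", "a", "t"]))               # blending
    print(blend_phonemes(substitute_first("mat", "b")))  # substitution
```

Run as a script, it reports 3 letters but 4 phonemes for box, and 4 letters but only 2 phonemes for shoe, which is exactly the mismatch that trips up adult readers who count letters instead of sounds.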

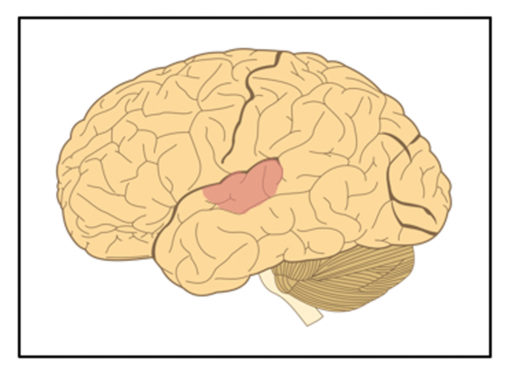

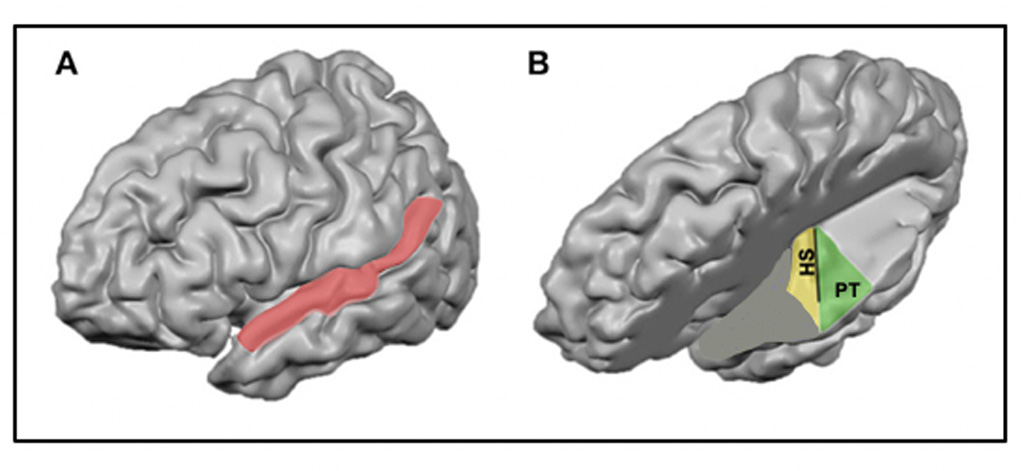

Figure 2. A left hemisphere view of the human brain with the posterior superior temporal region shaded pink. Modified from Hugh Geiney (shading added) on Wikimedia Commons, CC BY-SA

Spoken language is processed across many regions in the brain. For example, one area at the top of the temporal lobe (called the posterior superior temporal gyrus/sulcus, see Figure 2) is specialized for speech processing<sup>e.g., 17</sup>. In adults listening to speech, specific clusters of neurons in this region are activated by specific speech sounds, like /p/ or /m/<sup>18,19</sup>. That is, this region encodes and processes spoken language at the level of phonemes. This same superior temporal region is also active during silent reading in fluent readers, when there is no spoken sound input from the external environment<sup>e.g., 20,21</sup>. Thus, we borrow from the spoken language processing system in the service of reading<sup>e.g., 22</sup>.

But there is even more to this story: this language processing system is fundamentally altered in the course of learning to read<sup>e.g., 23,24,25</sup>. That is, learning to read actually changes the way that speech is processed in the brain. After learning to read, “speech processing automatically involves breaking up the speech sound into constituent phonemes… Language is never the same again”<sup>26, pp. 1010-1011</sup>. After just one year of reading instruction, activation levels in the superior temporal region increase when children listen to spoken language<sup>27</sup>. Children’s ability to identify the same sound /m/ in the spoken words mat, small, and jam is the result of learning to read. In alphabetic languages, learning to read is what allows us to process speech at the phoneme level, and what reorganizes the neural language processing systems underlying this skill<sup>2</sup>.

Connecting speech to print: decoding

So learning to read in alphabetic languages involves becoming aware of phonemes in speech. It also involves knowledge of letters and letter combinations in print.[3] Crucially, it further involves realizing that these two things are related: that the sounds of language map onto the written letters. The alphabetic principle is the understanding that there are specific relations between spoken sounds and printed letters. Reading instruction and phonological awareness are mutually reinforcing because “phonological awareness helps children to discover the alphabetic principle… [and] learning to read alphabetic script also develops phonological and phonemic awareness”<sup>28, p. 9</sup>. The job of the beginning reader is to learn which letters go with which sounds, called grapheme-phoneme correspondences.

[3] For information about the development of letter knowledge, see the brief in this series Emergent literacy: building a foundation for learning to read.

Learning the mappings between letters (graphemes) and sounds (phonemes) is the basis of decoding in beginning reading. Decoding is the effortful process of considering each letter in a printed word, mapping it to a sound, and then blending the sounds together in order to read the word. For example, in beginning reading, the written word cat on the page is read as /kuh/ /ahh/ /tuh/, and then those sounds are blended together into the spoken word cat. Letter-by-letter decoding depends in part on verbal short-term memory, which is predictive of beginning word-level reading<sup>29</sup>.
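The decode-then-blend sequence described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a model of what the brain does: the correspondence table is a small hypothetical sample of "taught" correspondences, graphemes are matched greedily from left to right with two-letter graphemes such as sh and ck tried first, and each grapheme maps to exactly one phoneme.

```python
from typing import Dict, List

# Hypothetical sample of taught grapheme-phoneme correspondences.
# Two-letter graphemes (digraphs) sit alongside single letters.
GPC: Dict[str, str] = {
    "sh": "sh", "ch": "ch", "ck": "k", "th": "th",
    "a": "a", "b": "b", "c": "k", "d": "d", "e": "e", "f": "f",
    "g": "g", "h": "h", "i": "i", "m": "m", "n": "n", "o": "o",
    "p": "p", "s": "s", "t": "t", "u": "u",
}

def segment(word: str) -> List[str]:
    """Split a printed word into graphemes, trying longer graphemes first."""
    graphemes = []
    i = 0
    longest = max(len(g) for g in GPC)
    while i < len(word):
        for size in range(longest, 0, -1):
            chunk = word[i:i + size]
            if len(chunk) == size and chunk in GPC:
                graphemes.append(chunk)
                i += size
                break
        else:  # no grapheme of any size matched at position i
            raise ValueError(f"no taught correspondence for {word[i]!r} in {word!r}")
    return graphemes

def decode(word: str) -> List[str]:
    """Sounding out: map each grapheme to its phoneme, in order."""
    return [GPC[g] for g in segment(word)]

def blend_sounds(phonemes: List[str]) -> str:
    """Blending: join the separate sounds into one spoken form, shown as /.../."""
    return "/" + "".join(phonemes) + "/"

if __name__ == "__main__":
    for w in ("cat", "shop", "duck"):
        print(w, segment(w), decode(w), blend_sounds(decode(w)))
```

Because matching is greedy, this sketch would wrongly treat the s and h in a word like mishap as the single grapheme sh. Handling such cases, and letters like c that map to more than one sound, needs more than a one-to-one table, which is part of what makes decoding in English slower to master.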

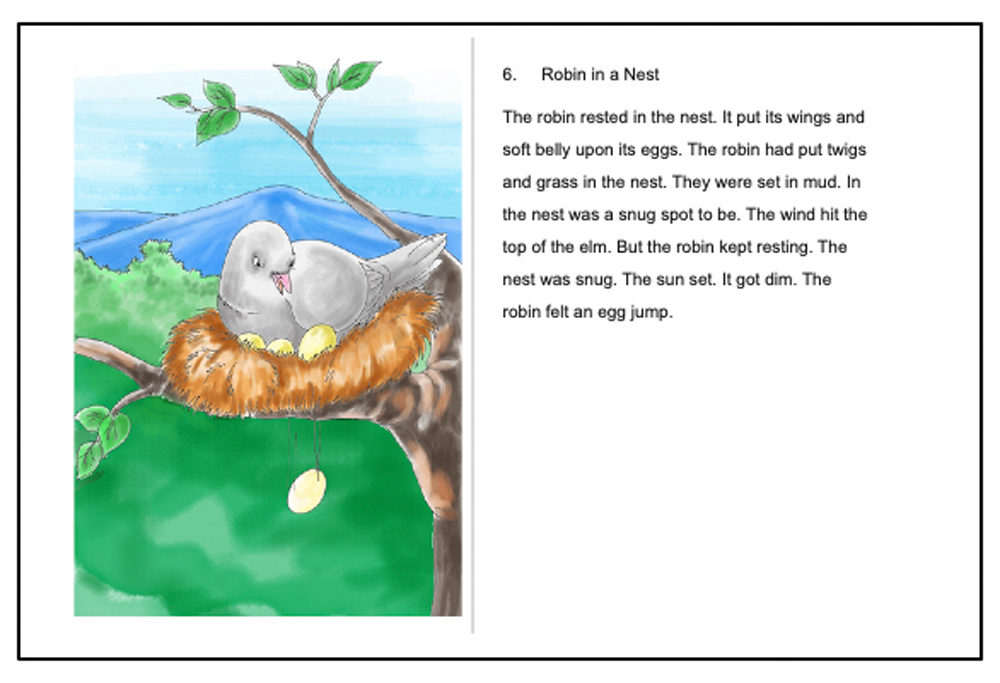

Figure 3. An example of a decodable text (fiction). From http://www.freereading.net/w/images/f/f5/Decodable_Fiction_6.pdf, CC-BY SA 3.0 US

Decodable texts are books that are designed to provide ample practice with sounding out pattern-based words (for example, cat, hat, mat, sat, bat) and are often used in phonics curricula.[4] See Figure 3 for an example. Phonics methods of teaching reading focus on building grapheme-phoneme correspondence knowledge in an explicit, systematic, and structured way and are considered best practice in teaching beginning reading in alphabetic languages<sup>e.g., 10,31</sup>. There is strong evidence in favor of teaching students to decode in beginning reading in order to recognize single words, as a foundational skill to support reading for understanding<sup>11</sup>.

[4] Decodable texts expose children to repeated letter-sound patterns (for example, -at) so that, with practice, beginning readers will eventually recognize the pattern and not have to sound out each element (for example, /at/ rather than /a/ /t/). Because of the controlled (and therefore limited) vocabulary and storyline in decodable texts, teachers may want to consider supplementation with other narrative and informational texts that can be read with support or read aloud by the teacher. Such texts can facilitate wider exposure to vocabulary and may increase interest in and motivation for reading<sup>30</sup>.

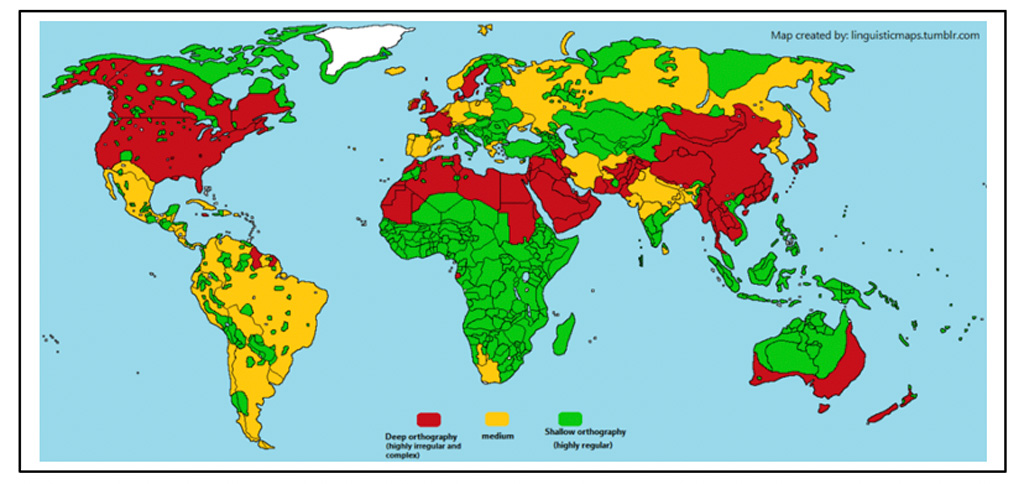

Figure 4. Orthographic depth, plotted as three levels although a spectrum in reality, for languages across the globe. Attribution: R. Pereira, https://linguisticmaps.tumblr.com/post/187856489343/orthographic-depth-languages-have-different-levels

How quickly children learn to decode and read words depends on the nature of the language involved<sup>e.g., 32,33,34</sup>. This is because the regularity of grapheme-phoneme correspondences varies across languages. This regularity, or lack thereof, is referred to as the orthographic depth of a language; orthographic depth information is plotted worldwide in Figure 4. When the mappings are very consistent (a given grapheme almost always maps to the same phoneme), as in languages with a shallow orthography such as Italian, children can learn all of the correspondences, which then apply to every word, within the first year (or even the first few months) of instruction. But when the mappings are less consistent (a given grapheme may map to multiple phonemes, such as the letter c in English mapping to either /k/ or /s/, as in cat or city), as in languages with a deep orthography, it takes more time to learn. For example, children learning to read in English take at least two more years of instruction to read at the same level as children learning to read in Italian<sup>32</sup>.
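One simple way to make "consistency" concrete is to count, for a given grapheme, how often it maps to its most common phoneme across a set of words. The Python sketch below does this for the letter c. The five-word sample is a hypothetical illustration chosen by hand; real measures of orthographic depth are computed over large word corpora and usually weight words by frequency, so the number printed here says nothing about English as a whole.

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical hand-aligned sample: (word, phoneme the letter c maps to).
C_SAMPLE: List[Tuple[str, str]] = [
    ("cat", "k"), ("city", "s"), ("cup", "k"), ("cent", "s"), ("cot", "k"),
]

def consistency(observations: List[Tuple[str, str]]) -> float:
    """Share of observations that use the grapheme's most frequent phoneme.

    1.0 means the grapheme always maps to the same phoneme (a shallow,
    consistent mapping); lower values mean the sound depends on the word.
    """
    counts = Counter(phoneme for _, phoneme in observations)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(observations)

print(f"c -> {consistency(C_SAMPLE):.2f}")  # 3 of the 5 words use /k/
```

In a perfectly shallow orthography every grapheme would score 1.0, so a beginning reader could apply each learned correspondence without checking which word it appears in.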

Figure 5. (A) A left hemisphere view of the human brain with the superior temporal region shaded pink. (B) An internal view from the top of the left temporal lobe with parietal areas removed to reveal Heschl’s sulcus (HS) and the planum temporale (PT). Modified from Michelle Moerel, Federico De Martino, and Elia Formisano, 2014, Wikimedia Commons, CC-BY 3.0

In both children and adults, visual letter and auditory speech sound information are integrated in neural regions along the top of the temporal lobe<sup>35-38</sup>. The superior temporal sulcus and planum temporale/Heschl’s sulcus regions are tucked into the folds at the top of the temporal lobe, as illustrated in Figure 5. These are the regions that encode grapheme-phoneme correspondences as children learn to read. Although which letters go with which sounds may be learned within months in consistent languages, developing from building associations to automatically integrating letters and sounds into new audio-visual neural representations may take years of reading experience<sup>37,39</sup>.

Visual processing: perceiving words

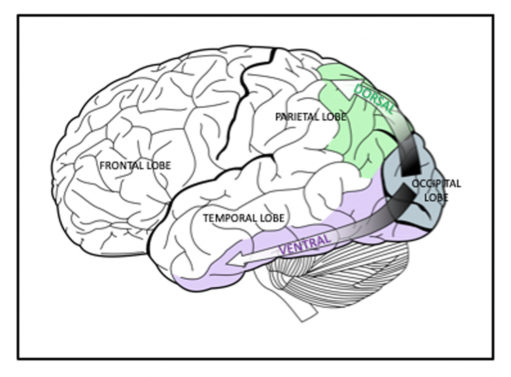

Figure 6. The ventral (purple shading) and dorsal (green shading) visual processing streams (labels added). Selket/Wikimedia Commons, GNU Free Documentation License, CC BY-SA 3.0

The ventral visual pathway, which travels from the occipital lobe along the bottom of the temporal lobe (see Figure 6), is specialized for processing texture, color, pattern, form, and fine detail<sup>e.g., 40</sup>. These characteristics of any incoming visual information are processed along this pathway. The neural processing of letters and words borrows heavily from the specializations of this part of the visual system. For example, the fine detail that distinguishes a G from a C, the form of the letter B as one vertical line and two curves in a specific arrangement, and the patterns of graphemes that comprise meaningful sequences, like cat or -ing, all capitalize on the specialties of this pathway.

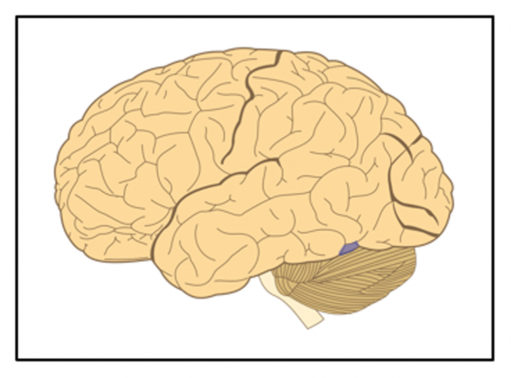

Reading not only borrows from the ventral visual pathway but also builds on and changes it. In an early study, researchers presented adult fluent readers with four types of stimuli: real words (for example, ANT), made-up words (for example, GEEL), letter strings (for example, VSHFFT), and strings of letter-like symbols<sup>41</sup>. In comparing the neural responses to these different types of stimuli, the researchers noticed one area along the ventral visual pathway that was active only for the real and made-up words. As these were the stimuli that visually took the form of words in English, the researchers called the area the “visual word form area.” This was one of the first neuroimaging reports of a brain region specialized for processing visual objects that took the form of words (that “looked like” words). This tiny bit of cortex seemed to be tuned to the orthography of words (see Figure 7). This finding has since been replicated many times, and the visual word form area has consistently been associated with automatic orthographic word processing in fluent readers<sup>e.g., 42,43-46</sup>.

Figure 7. A left hemisphere view of the human brain with the approximate location of the visual word form area shaded purple. Modified from Hugh Geiney (shading added) on Wikimedia Commons, CC BY-SA

The visual word form area is not activated by print in people who cannot read<sup>2</sup>. Learning to read drives the development and specialization of the visual word form area: this region becomes increasingly tuned to words with reading experience<sup>e.g., 42,44</sup>. Essentially, the visual word form area takes shape within the ventral visual pathway as expertise in reading increases<sup>e.g., 44,47</sup>.[5] It starts to specialize with growing letter knowledge, letter-sound knowledge, and decoding ability<sup>e.g., 47,49,50</sup>. Indeed, activation levels in the visual word form area are associated with decoding ability in readers from age 7 to age 18<sup>e.g., 51</sup>. Moreover, specialization of the visual word form area for automatic orthographic word processing continues beyond late elementary school<sup>e.g., 52,53</sup> and through adolescence<sup>e.g., 54</sup>.

[5] This is similar to how other areas along the ventral visual pathway become specialized for birds in expert birdwatchers or for cars in car experts<sup>48</sup>.

Visual processing: eye movements

The dorsal visual pathway, which travels from the occipital lobe through the posterior temporal lobe up into the parietal lobe (refer to Figure 6), is specialized for motion processing and depth perception<sup>e.g., 40</sup>. The contribution of this visual processing pathway to reading might be less obvious, given that there is nothing that moves on a standard page of text and print has no depth. However, the reader’s eyes do need to move across the text on a page, in a carefully calibrated and coordinated fashion. For example, trying to read English from right to left or skipping every other line of text will certainly create problems for understanding. Because of its primary specialties, the dorsal visual pathway is also involved in oculomotor control (eye movements)<sup>e.g., 55</sup>. So learning to read borrows from the dorsal visual processing pathway, as well.

Figure 8. A cartoon of a sentence read with fixations (blue dots, when the eyes are briefly stopped) and saccades (orange lines, when the eyes are moving across the text) marked. For skilled readers, fixations last about 200-250 milliseconds and average saccade length is 7 to 9 letter spaces. For beginning readers, fixations can last over 300 milliseconds and average saccade length is much shorter, sometimes only 1 or 2 letter spaces. Fixation duration and saccade length also depend on the familiarity and complexity of the text.

Readers’ eyes move across the page in a series of stops and jumps. The stops are called fixations, periods during which the eyes are relatively still, and the jumps are called saccades, periods when the eyes are moving to the next fixation<sup>e.g., 56</sup>. This is illustrated in Figure 8. Watching a beginning reader’s eye movements shows that the skills involved in fluidly moving one’s eyes across the text on a page take some time to develop! In comparison to fluent readers, beginning readers have longer fixations (their eyes stay in one place on the page longer), shorter saccades (they move their eyes to the next fixation by only a letter or two, rather than seven to nine as in fluent readers), and more regressions (they return their eyes more often to look at text already viewed)<sup>e.g., 56,57,58</sup>. On average, “it takes beginning readers two fixations to identify a word, whereas adult readers only need one fixation”<sup>58, p. 232</sup>. It is important to note that letters that are not fixated are still seen, within what is called the perceptual span<sup>e.g., 58</sup>.[6] So although not every letter on the page is fixated, letters within the perceptual span are still visually processed during reading. With reading practice, eye movements during reading become more adult-like. This likely occurs as the dorsal visual system learns “the specialized eye movements and visual attention patterns needed for reading”<sup>60, p. 72</sup>. Thus, in learning to read, we both borrow from and change processing in the dorsal visual pathway.

[6] As a fluent reader of an alphabetic language that is read left to right, like English, your perceptual span allows you to see 7 to 9 letter spaces to the right of your fixation, although not as clearly as the letters within the fixation. In alphabetic languages that are read right to left, the perceptual span extends the same distance to the left, the direction in which fluent readers are reading<sup>59</sup>.
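The fixation and saccade figures in the Figure 8 caption support a rough back-of-the-envelope comparison of skilled and beginning readers. The Python sketch below rests on simplifying assumptions that are not from the research itself: a hypothetical 60-letter-space line, evenly spaced fixations one saccade apart, no regressions, and zero time spent in the saccades themselves. Its results are illustrative orders of magnitude, not measured reading times.

```python
import math

def line_time_ms(line_length: int, saccade_length: float, fixation_ms: float) -> float:
    """Rough time to read one line: number of fixations x fixation duration.

    Simplifying assumptions: evenly spaced fixations, no regressions,
    and saccade durations treated as zero.
    """
    fixations = math.ceil(line_length / saccade_length)
    return fixations * fixation_ms

LINE = 60  # hypothetical line length in letter spaces

# Mid-range skilled values from the Figure 8 caption: ~8-letter saccades, ~225 ms fixations.
skilled = line_time_ms(LINE, saccade_length=8, fixation_ms=225)
# Beginning-reader values from the caption: ~2-letter saccades, ~300 ms fixations.
beginner = line_time_ms(LINE, saccade_length=2, fixation_ms=300)

print(f"skilled: {skilled:.0f} ms, beginner: {beginner:.0f} ms")
```

Under these assumptions the skilled reader needs 8 fixations (about 1.8 seconds) and the beginning reader 30 fixations (about 9 seconds) for the same line. Even before adding the extra regressions that beginning readers make, shorter saccades alone multiply the number of fixations several times over.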

Conclusion, part 1

Because the brain is not designed for reading, learning to read does not happen naturally, without instruction<sup>cf. 61</sup>. Put another way, reading is not innate, and children must learn the systematic relations between speech sounds and visual symbols in their language(s): children must be taught to read. It follows that learning to read is not only a set of technical skills but also a social practice, situated in the cultural context of schooling and other learning environments<sup>62</sup>.

At the start of formal instruction, learning to read in alphabetic languages depends on structured experiences with the sounds of language (phonemes) and printed letters and words (graphemes), understanding of the alphabetic principle, and lots of practice mapping graphemes to phonemes until words can be recognized and read automatically.[7] As discussed in this brief, these experiences borrow from, build on, and reshape multiple processing networks in the brain. Instruction must address all of these skills in order to cultivate a child who can read, and all of these neural networks in order to build a brain that can read.

[7] It follows that basic vision and hearing screenings are recommended well before formal learning to read commences; if a child cannot see the graphemes clearly or hear the phonemes clearly, the process will be compromised.

Yet learning to read words involves even more; many processes must happen in concert. Some other important aspects of beginning reading are discussed in the second part of this brief, Building a brain that can read, part 2: vocabulary and meaning.

References

1. Dehaene, S. Reading in the brain: the science and evolution of a human invention. (Viking, 2009).

2. Dehaene, S. et al. How learning to read changes the cortical networks for vision and language. Science 330, 1359-1364, doi:10.1126/science.1194140 (2010).

3. World Literacy Foundation. The economic and social costs of illiteracy. (World Literacy Foundation, Melbourne, Australia, 2018).

4. Treiman, R. The foundations of literacy. Current Directions in Psychological Science 9, 89-92, doi:10.1111/1467-8721.00067 (2000).

5. Anthony, J. L. & Francis, D. J. Development of phonological awareness. Current Directions in Psychological Science 14, 255-259, doi:10.1111/j.0963-7214.2005.00376.x (2005).

6. Høien, T., Lundberg, I., Stanovich, K. E. & Bjaalid, I.-K. Components of phonological awareness. Reading and Writing 7, 171-188, doi:10.1007/BF01027184 (1995).

7. Melby-Lervåg, M., Halaas, S.-A. H. & Hulme, C. Phonological skills and learning to read: a meta-analytic review. Psychol. Bull. 138, 322-352, doi:10.1037/a0026744 (2012).

8. Calfee, R. C., Lindamood, P. & Lindamood, C. Acoustic-phonetic skills and reading – Kindergarten through twelfth grade. J. Educ. Psychol. 64, 293-298, doi:10.1037/h0034586 (1973).

9. Cunningham, A. J., Witton, C., Talcott, J. B., Burgess, A. P. & Shapiro, L. R. Deconstructing phonological tasks: the contribution of stimulus and response type to the prediction of early decoding skills. Cognition 143, 178-186, doi:10.1016/j.cognition.2015.06.013 (2015).

10. National Institute of Child Health and Human Development. Report of the National Reading Panel. Teaching children to read: an evidence-based assessment of the scientific research literature on reading and its implications for reading instruction (NIH Publication No. 00-4769). (U.S. Government Printing Office, Washington, DC, 2000).

11. Foorman, B. et al. Foundational skills to support reading for understanding in kindergarten through 3rd grade. (National Center for Education Evaluation and Regional Assistance (NCEE), Institute of Education Sciences, U.S. Department of Education, Washington, DC, 2016).

12. Bos, C., Mather, N., Dickson, S., Podhajski, B. & Chard, D. Perceptions and knowledge of preservice and inservice educators about early reading instruction. Annals of Dyslexia 51, 97-120, doi:10.1007/s11881-001-0007-0 (2001).

13. Cheesman, E. A., McGuire, J. M., Shankweiler, D. & Coyne, M. First-year teacher knowledge of phonemic awareness and its instruction. Teacher Education and Special Education 32, 270-289, doi:10.1177/0888406409339685 (2009).

14. Stainthorp, R. Use it or lose it. Literacy Today, 16-17 (2003).

15. Moats, L. C. Speech to print: language essentials for teachers. (Paul H. Brookes, 2000).

16. Lindamood, P., Bell, N. & Lindamood, P. Sensory-cognitive factors in the controversy over reading instruction. Journal of Developmental and Learning Disorders 1, 143-182 (1997).

17. Binder, J. R. et al. Human temporal lobe activation by speech and nonspeech sounds. Cereb. Cortex 10, 512-528, doi:10.1093/cercor/10.5.512 (2000).

18. Mesgarani, N., Cheung, C., Johnson, K. & Chang, E. F. Phonetic feature encoding in human superior temporal gyrus. Science 343, 1006-1010, doi:10.1126/science.1245994 (2014).

19. Chang, E. F. et al. Categorical speech representation in human superior temporal gyrus. Nat. Neurosci. 13, 1428-1433, doi:10.1038/nn.2641 (2010).

20. Joubert, S. et al. Neural correlates of lexical and sublexical processes in reading. Brain Lang. 89, 9-20, doi:10.1016/S0093-934X(03)00403-6 (2004).

21. Simos, P. G. et al. Brain mechanisms for reading: the role of the superior temporal gyrus in word and pseudoword naming. Neuroreport 11, 2443-2447, doi:10.1097/00001756-200008030-00021 (2000).

22. Moats, L. C. Speech to print: language essentials for teachers. 2nd edn, (Paul H. Brookes, 2010).

23. Brennan, C., Cao, F., Pedroarena-Leal, N., McNorgan, C. & Booth, J. R. Reading acquisition reorganizes the phonological awareness network only in alphabetic writing systems. Hum. Brain Mapp. 34, 3354-3368, doi:10.1002/hbm.22147 (2013).

24. Castro-Caldas, A., Petersson, K. M., Reis, A., Stone-Elander, S. & Ingvar, M. The illiterate brain: Learning to read and write during childhood influences the functional organization of the adult brain. Brain 121, 1053-1063, doi:10.1093/brain/121.6.1053 (1998).

25. Nation, K. & Hulme, C. Learning to read changes children’s phonological skills: evidence from a latent variable longitudinal study of reading and nonword repetition. Developmental Science, doi:10.1111/j.1467-7687.2010.01008.x (2010).

26. Frith, U. Literally changing the brain. Brain 121, 1011-1012, doi:10.1093/brain/121.6.1011 (1998).

27. Monzalvo, K. & Dehaene-Lambertz, G. How reading acquisition changes children’s spoken language network. Brain Lang. 127, 356-365, doi:10.1016/j.bandl.2013.10.009 (2013).

28. Pang, E. S., Muaka, A., Bernhardt, E. B. & Kamil, M. L. Teaching reading. Education Practices Series-12. (International Bureau of Education, International Academy of Education, Geneva, Switzerland, 2003).

29. Cunningham, A. J., Burgess, A. P., Witton, C., Talcott, J. B. & Shapiro, L. R. Dynamic relationships between phonological memory and reading: a five year longitudinal study from age 4 to 9. Developmental Science, 1-18, doi:10.1111/desc.12986 (2020).

30. Templeton, S. & Gehsmann, K. M. Teaching reading and writing: the developmental approach. (Pearson, 2014).

31. National Research Council. Preventing reading difficulties in young children. (National Academy Press, 1998).

32. Seymour, P. H. K., Aro, M. & Erskine, J. M. Foundation literacy acquisition in European orthographies. Br. J. Psychol. 94, 143-174, doi:10.1348/000712603321661859 (2003).

33. Ziegler, J. C. & Goswami, U. Becoming literate in different languages: similar problems, different solutions. Developmental Science 9, 429-453 (2006).

34. Caravolas, M., Lervåg, A., Defior, S., Málková, G. S. & Hulme, C. Different patterns, but equivalent predictors, of growth in reading in consistent and inconsistent orthographies. Psychological Science 24, 1398-1407, doi:10.1177/0956797612473122 (2013).

35. Blau, V. et al. Deviant processing of letters and speech sounds as proximate causes of reading failure: a functional magnetic resonance imaging study of dyslexic children. Brain 133, 868-879, doi:10.1093/brain/awp308 (2010).

36. van Atteveldt, N., Formisano, E., Goebel, R. & Blomert, L. Integration of letters and speech sounds in the human brain. Neuron 43, 271-282, doi:10.1016/j.neuron.2004.06.025 (2004).

37. Blomert, L. The neural signature of orthographic-phonological binding in successful and failing reading development. Neuroimage 57, 695-703, doi:10.1016/j.neuroimage.2010.11.003 (2011).

38. Richlan, F. The functional neuroanatomy of letter-speech sound integration and its relation to brain abnormalities in developmental dyslexia. Frontiers in Human Neuroscience 13, 1-8, doi:10.3389/fnhum.2019.00021 (2019).

39. Froyen, D. J. W., Bonte, M. L., van Atteveldt, N. & Blomert, L. The long road to automation: neurocognitive development of letter-speech sound processing. J. Cogn. Neurosci. 21, 567-580, doi:10.1162/jocn.2009.21061 (2009).

40. Livingstone, M. & Hubel, D. Segregation of form, color, movement, and depth: anatomy, physiology, and perception. Science 240, 740-749, doi:10.1126/science.3283936 (1988).

41. Petersen, S. E., Fox, P. T., Posner, M. I., Mintun, M. & Raichle, M. E. Positron emission tomographic studies of the cortical anatomy of single-word processing. Nature 331, 585-589, doi:10.1038/331585a0 (1988).

42. Cohen, L. & Dehaene, S. Specialization within the ventral stream: the case for the visual word form area. Neuroimage 22, 466-476, doi:10.1016/j.neuroimage.2003.12.049 (2004).

43. Cohen, L. et al. Language-specific tuning of visual cortex? Functional properties of the Visual Word Form Area. Brain 125, 1054-1069, doi:10.1093/brain/awf094 (2002).

44. McCandliss, B. D., Cohen, L. & Dehaene, S. The visual word form area: expertise for reading in the fusiform gyrus. Trends in Cognitive Sciences 7, 293-299, doi:10.1016/S1364-6613(03)00134-7 (2003).

45. Glezer, L. S., Jiang, X. & Riesenhuber, M. Evidence for highly selective neuronal tuning to whole words in the “visual word form area”. Neuron 62, 199-204, doi:10.1016/j.neuron.2009.03.017 (2009).

46. Dehaene, S. & Cohen, L. The unique role of the visual word form area in reading. Trends in Cognitive Sciences 15, 254-262, doi:10.1016/j.tics.2011.04.003 (2011).

47. Wandell, B. A., Rauschecker, A. M. & Yeatman, J. D. Learning to see words. Annu. Rev. Psychol. 63, 31-53, doi:10.1146/annurev-psych-120710-100434 (2012).

48. Gauthier, I., Skudlarski, P., Gore, J. C. & Anderson, A. W. Expertise for cars and birds recruits brain areas involved in face recognition. Nat. Neurosci. 3, 191-197, doi:10.1038/72140 (2000).

49. Brem, S. et al. Brain sensitivity to print emerges when children learn letter-speech sound correspondences. Proceedings of the National Academy of Sciences 107, 7939-7944, doi:10.1073/pnas.0904402107 (2010).

50. Centanni, T. et al. Early development of letter specialization in left fusiform is associated with better word reading and smaller fusiform face area. Developmental Science 21, e12658, doi:10.1111/desc.12658 (2018).

51. Shaywitz, B. A. et al. Disruption of posterior brain systems for reading in children with developmental dyslexia. Biol. Psychiatry 52, 101-110, doi:10.1016/S0006-3223(02)01365-3 (2002).

52. Coch, D. & Meade, G. N1 and P2 to words and wordlike stimuli in late elementary school children and adults. Psychophysiology 53, 115-128, doi:10.1111/psyp.12567 (2016).

53. Eddy, M. D., Grainger, J., Holcomb, P. J., Mitra, P. & Gabrieli, J. D. E. Masked priming and ERPs dissociate maturation of orthographic and semantic components of visual word recognition in children. Psychophysiology 51, 136-141, doi:10.1111/psyp.12164 (2014).

54. Brem, S. et al. Evidence for developmental changes in the visual word processing network beyond adolescence. Neuroimage 29, 822-837, doi:10.1016/j.neuroimage.2005.09.023 (2006).

55. Boden, C. & Giaschi, D. M-stream deficits and reading-related visual processes in developmental dyslexia. Psychol. Bull. 133, 346-366, doi:10.1037/0033-2909.133.2.346 (2007).

56. Rayner, K., Foorman, B. R., Perfetti, C. A., Pesetsky, D. & Seidenberg, M. S. How psychological science informs the teaching of reading. Psychological Science in the Public Interest 2, 31-74, doi:10.1111/1529-1006.00004 (2001).

57. Blythe, H. I. Developmental changes in eye movements and visual information encoding associated with learning to read. Current Directions in Psychological Science 23, 201-207, doi:10.1177/0963721414530145 (2014).

58. Rayner, K. Eye movements and the perceptual span in beginning and skilled readers. J. Exp. Child Psychol. 41, 211-236, doi:10.1016/0022-0965(86)90037-8 (1986).

59. Jordan, T. R. et al. Reading direction and the central perceptual span: evidence from Arabic and English. Psychonomic Bulletin & Review 21, 505-511, doi:10.3758/s13423-013-0510-4 (2014).

60. Wandell, B. A. The neurobiological bases of seeing words. Ann. N. Y. Acad. Sci. 1224, 63-80, doi:10.1111/j.1749-6632.2010.05954.x (2011).

61. Goodman, K. S. & Goodman, Y. M. Learning to read is natural. In Theory and practice of early reading Vol. 1 (eds L.B. Resnick & P.A. Weaver) 137-154 (Erlbaum, 1979).

62. Street, B. Learning to read from a social practice view: ethnography, schooling and adult learning. Prospects 46, 335-344, doi:10.1007/s11125-017-9411-z (2016).